Agents Need Microservices

As I wrote last month, LittleHorse helps ensure alignment between your business goals and your software, acting as a Command Center for Business-as-Code. AI Agents are starting to unlock the production of vastly greater amounts of code than ever before, making this alignment and governance even more crucial.

While good and useful software can be produced by LLMs, I don't believe in wasting people's time by asking them to read AI slop. This blog was written entirely without the aid of LLMs.

In December 2021, I set out with my father to build the best harness for microservices that the world had ever seen. Agentic AI, and especially vibe-coding, was a monkey wrench that none of us could have predicted in 2021. The question every architect must face now is, "how will my architecture change because of agentic development?" It turns out that we're barreling straight for a world that will be saturated with microservices in need of a Command Center.

After much study (at the terminal, in the field, reading papers), I believe that:

- AI-assisted coding will result in far more software being written: things that didn't make sense to automate before are suddenly in play.

- This explosion of additional automation will naturally produce many small pieces of independently deployable software that accomplish small tasks: microservices.

- The primary focus of software architects and engineers will shift to harnessing microservices and understanding business requirements. We'll have to understand, verify, and maintain much larger systems than before.

A microservice is a small piece of software that is developed and deployed in-house to provide a small set of functionality. Microservices allow different pieces of software to be deployed independently, but they come at the cost of distributed complexity.

Increasing Automation

In economics, Jevons Paradox posits that, when a market becomes able to use a resource more efficiently, the market ends up using more of that resource. This is because greater efficiency makes certain transactions, which were previously unprofitable, profitable.

Hobbyist Projects

In the context of agentic coding and software, we will see vastly more code being produced by even more engineers. Commit data from GitHub corroborates this increase in total software written, and we've even seen an uptick in software job postings in the three months since Opus 4.5 (and now 4.6) was released. (For you Codex fans out there, I'm not trying to argue which model is better. Just don't use GPT-3.5, please.)

The first consequence of the cost of writing code* reaching zero is summarized by Jevons Paradox: many things that were too costly to automate are now in play. On the "hobbyist" side of things, I've seen business analysts vibe-coding data portals and dashboards, non-technical founders taking over the maintenance of their websites, and a litany of vibe-coded personal productivity apps (just open Twitter and you'll see dozens).

Enterprise Automation

However, the productivity gains from agentic coding are not limited to vibe-coded toys. Many enterprise projects that were previously too ambitious are now in play. In the field, we've seen:

- A bank has achieved a 10x speedup in migration from COBOL to Java (yay Java!).

- A logistics company built an in-house fleet management system using LittleHorse, saving money by canceling several SaaS and iPaaS subscriptions, and enjoying a more custom-built solution.

- HR and accounting firms used LittleHorse and agentic coding to build custom integrations between back-office SaaS apps.

Without microservices and AI, none of these wins would have been possible. This trend will only accelerate.

*Writing code and building digital solutions to business problems are two distinct problems.

Harnessing Agent-Driven Code

In a world where Claude writes vast quantities of code far faster than we can review it, how will we avoid the so-called "AI Slopocalypse"? We will create harnesses to give our enthusiastic robotic friends the best chance of success:

- Break up your system into smaller components that agents can easily reason about, work on independently, and deploy independently (cough microservices cough).

- Use a harness that allows you to easily compose the code produced by your agents and integrate with the legacy stack.

- Most crucially, be able to audit and govern the Frankensystem that your agents build for you with the Saddle Command Center for microservices.

Agents Need Microservices

Even though LLM context windows continue to grow, agents—just like the smartest humans—have a higher chance of success when given a smaller problem to work on. The chances of success skyrocket when you give the agent the ability to verify that it arrived at the correct answer in a loop. I've noticed that our good friend Claude can be quite persistent when you give him Opus 4.6 and a way to check his answer.

Also, the speed at which agents can deliver software scales roughly linearly with the number of independent tasks that can be worked on in parallel. By using microservices, a single large problem ("write a custom ERP system for me") can be broken into manageable, parallelizable tasks, enabling a large speedup.

Lastly, breaking problems down into smaller chunks such as microservices, or better yet Task Workers in LittleHorse, makes it easier to define verification steps for each task given to agents.
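The verify-in-a-loop idea can be sketched in a few lines. This is a minimal illustration, assuming each small task ships with its own automated check (a test suite, a type checker, a schema validation). `attempt` stands in for an agent and, like every other name here, is hypothetical rather than part of Claude's or LittleHorse's tooling.

```python
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def solve_with_verification(
    attempt: Callable[[int, Optional[str]], T],
    verify: Callable[[T], Optional[str]],
    max_attempts: int = 5,
) -> T:
    """Generate a candidate, check it, and loop with feedback until it passes.

    attempt(n, feedback) produces candidate number n, given the error message from
    the previous failed check (None on the first try). verify(candidate) returns
    None when the candidate passes, or a message describing what is wrong.
    """
    feedback: Optional[str] = None
    for n in range(1, max_attempts + 1):
        candidate = attempt(n, feedback)
        feedback = verify(candidate)
        if feedback is None:
            return candidate  # verified: stop looping
    raise RuntimeError(f"no verified result after {max_attempts} attempts: {feedback}")


# Toy stand-ins: the "agent" gets closer on each try; the check is a simple threshold.
def toy_agent(n: int, feedback: Optional[str]) -> int:
    return n * 10


def at_least_30(candidate: int) -> Optional[str]:
    return None if candidate >= 30 else f"{candidate} is below 30"


print(solve_with_verification(toy_agent, at_least_30))  # 30
```

The smaller the task, the easier it is to write a `verify` function that is cheap, precise, and trustworthy, which is exactly what makes the loop converge.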

Microservices Need a Harness

As I wrote two years ago, microservices can quickly devolve into an unsupervised and chaotic mess. While it's easier to reason about a single microservice than about an entire monolith, teams are still left with the struggle of piecing the microservices together into a cohesive system.

LittleHorse solves this problem by providing a Command Center to compose, connect, and govern your microservice flows. It gives you:

- A catalog of each task or microservice, including API contracts (inputs and outputs).

- Pre-built durability primitives, such as retries and failure handlers.

- A safe, type-checked, and auditable way to compose multiple microservices into working flows.
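To make these three bullets concrete, here is a toy, in-process sketch of a catalog with input/output contracts, retries with a failure handler, and type-checked composition. It is written from scratch for illustration: `TaskDef`, `Catalog`, `compose`, and `run_flow` are hypothetical names, not the LittleHorse SDK. The real harness persists flow state on the LittleHorse Server, while this sketch keeps everything in memory.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional


@dataclass
class TaskDef:
    """A catalog entry: a named task plus its input/output contract."""
    name: str
    input_type: type
    output_type: type
    fn: Callable[[Any], Any]
    max_retries: int = 2  # retries allowed after the first attempt


class Catalog:
    """Registry of every task, so contracts can be looked up by name."""

    def __init__(self) -> None:
        self.tasks: Dict[str, TaskDef] = {}

    def register(self, task: TaskDef) -> None:
        self.tasks[task.name] = task


def compose(catalog: Catalog, names: List[str]) -> List[TaskDef]:
    """Chain tasks, rejecting the flow up front if an output type doesn't fit the next input."""
    steps = [catalog.tasks[name] for name in names]
    for prev, nxt in zip(steps, steps[1:]):
        if not issubclass(prev.output_type, nxt.input_type):
            raise TypeError(
                f"{prev.name} returns {prev.output_type.__name__}, "
                f"but {nxt.name} expects {nxt.input_type.__name__}"
            )
    return steps


def run_flow(
    steps: List[TaskDef],
    value: Any,
    on_failure: Optional[Callable[[str, Exception], Any]] = None,
) -> Any:
    """Run steps in order; retry each failing step, then defer to the failure handler."""
    for step in steps:
        for attempt in range(step.max_retries + 1):
            try:
                value = step.fn(value)
                break  # step succeeded; move on to the next one
            except Exception as exc:
                if attempt == step.max_retries:
                    if on_failure is None:
                        raise
                    return on_failure(step.name, exc)
    return value


# Example: a two-step order flow whose second step fails once before succeeding.
calls = {"reserve": 0}


def reserve_stock(qty: int) -> str:
    calls["reserve"] += 1
    if calls["reserve"] == 1:
        raise ConnectionError("inventory service timed out")
    return f"reserved {qty} units"


catalog = Catalog()
catalog.register(TaskDef("parse-quantity", str, int, int))
catalog.register(TaskDef("reserve-stock", int, str, reserve_stock))

flow = compose(catalog, ["parse-quantity", "reserve-stock"])
print(run_flow(flow, "3"))  # reserved 3 units
```

Checking contracts in `compose` means a mismatched flow (say, `parse-quantity` feeding its `int` into another `parse-quantity`) is rejected before anything runs, rather than failing halfway through a customer's order.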

For more info about LittleHorse and microservices, check out my Integration Patterns series.

Auditing & Compliance

We'll soon see agents producing code at a rate that far surpasses human review capacity. When this happens, how will we understand our system? More importantly, how will we pass security audits and prevent disastrous business process bugs? Some say "just ask Claude to write documentation." However, docs can drift from the code, and LLMs can still make mistakes.

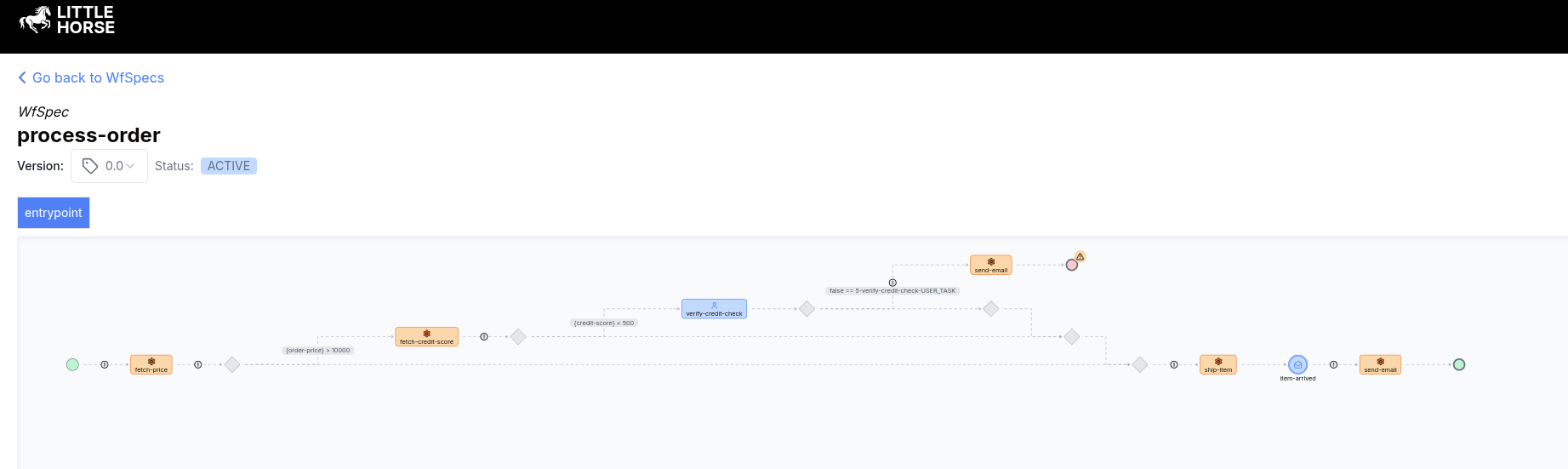

Using a microservice harness like LittleHorse provides a really elegant solution: the dashboard allows you to deterministically visualize the end-to-end flow of your system simply by projecting the code written by your agents.
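A projected diagram can't drift because it is a pure function of the flow definition. As a toy sketch, assuming a flow is stored as a list of (step, next step) edges (a hypothetical format, not how LittleHorse represents workflows), rendering it as Mermaid flowchart text looks like this:

```python
from typing import List, Tuple

# Hypothetical flow format: each edge says which step runs after which.
Flow = List[Tuple[str, str]]


def to_mermaid(flow: Flow) -> str:
    """Render a flow as Mermaid flowchart text; the same flow always yields the same diagram."""
    lines = ["flowchart LR"]
    for src, dst in flow:
        lines.append(f"    {src} --> {dst}")
    return "\n".join(lines)


order_flow: Flow = [("validate_order", "charge_card"), ("charge_card", "ship_order")]
print(to_mermaid(order_flow))
```

Because the diagram is derived from the definition rather than written alongside it, there is nothing to keep in sync by hand.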

Even better, every interaction is logged, traced, and indexed in LittleHorse, so you can easily chase down bugs and security incidents and allow your system to self-heal. Claude is already quite capable of debugging LittleHorse workflows using lhctl, and this capability will only get better.
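Here is a toy sketch of why indexing matters when chasing a bug: if every task execution is appended to a log and indexed by run, pulling up one run's full history is a lookup rather than a grep across many services' logs. `AuditLog`, `Event`, and the status strings are hypothetical illustrations, not LittleHorse's data model or anything `lhctl` returns.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List


@dataclass(frozen=True)
class Event:
    """One immutable record of something that happened during a run."""
    run_id: str
    task: str
    status: str
    detail: str = ""
    at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class AuditLog:
    """Append-only event log with an index from run id to that run's events."""

    def __init__(self) -> None:
        self._events: List[Event] = []
        self._by_run: Dict[str, List[int]] = defaultdict(list)

    def record(self, run_id: str, task: str, status: str, detail: str = "") -> None:
        self._by_run[run_id].append(len(self._events))  # index before appending
        self._events.append(Event(run_id, task, status, detail))

    def trace(self, run_id: str) -> List[Event]:
        """Every event for one run, in the order it happened."""
        return [self._events[i] for i in self._by_run.get(run_id, [])]

    def failures(self) -> List[Event]:
        """All failed events across every run."""
        return [e for e in self._events if e.status == "FAILED"]


log = AuditLog()
log.record("order-42", "parse-quantity", "COMPLETED")
log.record("order-43", "parse-quantity", "COMPLETED")
log.record("order-42", "reserve-stock", "FAILED", "inventory service timed out")
log.record("order-42", "reserve-stock", "COMPLETED")

for event in log.trace("order-42"):
    print(event.task, event.status, event.detail)
```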

While it might be hard to understand a production-ready order-processing microservices architecture, it's simple to reason about your vibe-coded business logic when it's displayed on the LittleHorse dashboard.

Conclusion

2026 is quite an amazing time to be alive. I would be lying if I told you that I had vibe-coding and AI Agents in mind when I founded LittleHorse in 2021; it just so happens that a microservice harness is even more crucial in 2026 than it was four years ago.

This post has been very forward-looking, but as we near the release of the Saddle Console and version 1.0 of the LittleHorse Server, I'd like to take a minute to look back. Working on LittleHorse has been a blessing in so many ways, but nothing has been better than getting to know my team members, advisors, and other folks I've met along the way.

I'm deeply proud of my team. They've taught me so much about life, leadership, software, project management, business, and so many other things, and many of them have become my close friends. As we've had our first few big customer success stories with LittleHorse, I'm filled with pride for what we've built, and I'm excited to see what we will do this upcoming year.

To the Worried Engineer

As with every seismic shift, there will be winners and losers. The winners of the AI era will be people who can think at a high level of abstraction with agency (pun very much intended). Unfortunately, folks who built their careers on solving small, well-written JIRA tickets will need to adapt to working at a much higher level of abstraction. They'll need to begin engaging business stakeholders directly, they'll need to understand the business value of what they're building, and they'll need to become accustomed to managing much larger systems.

In short, I think the era of making 6 figures as a "CRUD Monkey" is over.

For those of us who can adapt to the new world (by thinking backwards from business problems while still understanding fundamental architecture, by letting go of the need to micromanage syntax, by inventing better solutions rather than copy-pasting from Stack Overflow), our window of opportunity is just opening. We now have an army of little personal assistants that can do the undifferentiated stuff for us. Now, software engineers can finally be true "engineers." We are blessed to live in these times.

Further Reading

Three pieces in particular were formative in writing this essay:

- Anthropic's Claude Code succeeded in writing a compiler in 2 weeks without human intervention.

- OpenAI's Codex Team blogged about Harness Engineering.

- Chris Lattner, the creator of LLVM, wrote about Anthropic's C Compiler experiment.