Concepts

You can think of the LittleHorse Kernel as not just a Workflow Engine but also a distributed programming runtime that lets you write and run distributed programs as if you were programming a single computer.

What makes LittleHorse unique among workflow engines is that it is built with developers and distributed systems in mind. We designed LittleHorse from the ground up to be developer-friendly and have concepts that are analogous to the primitives used in day-to-day programming languages, allowing you to build real-time, scalable, and reliable applications with ease.

Core Concepts

The core concepts of LittleHorse are as follows:

- The LittleHorse Kernel is the digital kernel or scheduler in LittleHorse. It runs somewhere (in the cloud, in your environment, etc.) and exposes a gRPC API to clients. In our analogy to Java, it is the distributed counterpart of the JVM.

- A Task Definition (TaskDef) is a blueprint for a single unit of work that can be executed in LittleHorse. It is analogous to a method signature in Java.

- A Task Worker (LHTaskWorker) is a long-lived process that executes TaskDefs. A Task Worker is code that you write, which uses our SDK to connect to LittleHorse, listens on a Task Queue, and executes a function that you wrote every time LittleHorse puts a task on the queue.

- A Workflow Specification (WfSpec) defines the logic of a process that is executed in LittleHorse. It is similar to a program in Java.

- A Workflow Run (WfRun) is a running instance of a WfSpec. It is similar to a running instance of a program in Java.

Inside a WfSpec, LittleHorse provides all of the primitives that you expect in a programming language, including variables, conditionals, threads, exception handlers, and interrupts. The only difference is that they are built for a distributed environment.

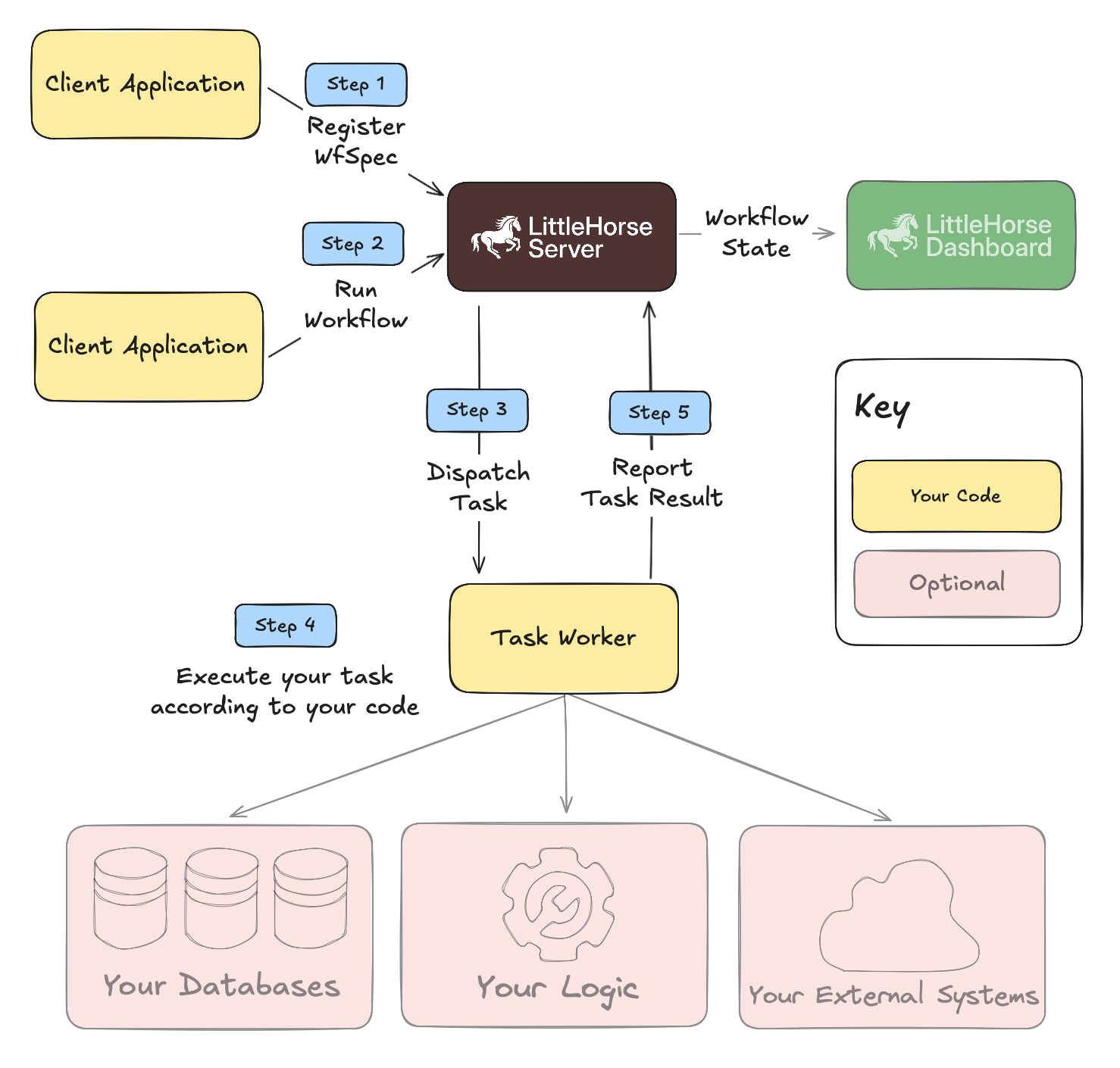

When you run a WfSpec, you get a WfRun, which is a running instance of that WfSpec. As your WfRun progresses through the WfSpec and encounters a Task Node, LittleHorse dispatches a Task Run to an appropriate Task Worker. Once the Task Worker executes the Task Run and reports the result to LittleHorse, the LittleHorse Kernel advances the WfRun to the next step in the Workflow Specification.
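The dispatch-and-advance loop described above can be sketched as a toy in-memory model. This is only an illustration under stated assumptions: `ToyKernel`, `poll_once`, and the `greet` and `shout` task names are hypothetical, everything runs in one process, and none of it is the LittleHorse SDK or its gRPC API.

```python
from collections import deque
from dataclasses import dataclass
from typing import Any, Callable, Deque, Dict, List


@dataclass
class WfSpec:
    """Blueprint: an ordered list of TaskDef names, one per Task Node."""
    name: str
    task_nodes: List[str]


@dataclass
class WfRun:
    """Running instance of a WfSpec: current position plus the latest output."""
    spec: WfSpec
    value: Any
    position: int = 0
    status: str = "RUNNING"


class ToyKernel:
    """Hypothetical stand-in for the LittleHorse Kernel (not the real API)."""

    def __init__(self) -> None:
        # One Task Queue per registered TaskDef name.
        self.task_queues: Dict[str, Deque[WfRun]] = {}

    def register_task_def(self, name: str) -> None:
        self.task_queues[name] = deque()

    def run_wf(self, spec: WfSpec, input_value: Any) -> WfRun:
        wf_run = WfRun(spec=spec, value=input_value)
        self._schedule_next(wf_run)
        return wf_run

    def _schedule_next(self, wf_run: WfRun) -> None:
        # No Task Nodes left: the WfRun is done.
        if wf_run.position >= len(wf_run.spec.task_nodes):
            wf_run.status = "COMPLETED"
            return
        # Otherwise dispatch a Task Run by putting it on the matching queue.
        task_name = wf_run.spec.task_nodes[wf_run.position]
        self.task_queues[task_name].append(wf_run)

    def report_result(self, wf_run: WfRun, result: Any) -> None:
        # The worker reported a result, so advance to the next step.
        wf_run.value = result
        wf_run.position += 1
        self._schedule_next(wf_run)


def poll_once(kernel: ToyKernel, task_name: str,
              task_method: Callable[[Any], Any]) -> bool:
    """One iteration of a Task Worker loop; returns False if the queue is empty."""
    queue = kernel.task_queues[task_name]
    if not queue:
        return False
    wf_run = queue.popleft()
    kernel.report_result(wf_run, task_method(wf_run.value))
    return True


kernel = ToyKernel()
kernel.register_task_def("greet")
kernel.register_task_def("shout")
wf_run = kernel.run_wf(WfSpec("demo", ["greet", "shout"]), "Ada")
poll_once(kernel, "greet", lambda name: f"hello, {name}")
poll_once(kernel, "shout", str.upper)
```

In this sketch the WfRun only moves forward when a worker reports a result, which mirrors the division of labor described above: the kernel owns the workflow state, and the workers own the business logic.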

The Development Process

As a user of LittleHorse, you need to write and deploy code that does the following:

- First, you need to write Task Workers, which are long-lived processes that execute your code (Task Methods) when an appropriate TaskRun is scheduled in LittleHorse. Task Workers need to be able to open a network connection to the LittleHorse Kernel.

- You must write code that defines a WfSpec. This code is only executed once, and it creates a JSON/Protobuf representation of a workflow. This representation is registered to LittleHorse and tells the LittleHorse Kernel what steps are involved in your process.

- Lastly, you write code that tells LittleHorse to run a WfSpec. Often, this is a web server that accepts REST requests (e.g. POST /order) or consumes from a stream (e.g. an Apache Kafka topic) and runs a WfRun for every trigger event.
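As a rough illustration of how these three pieces fit together, here is a self-contained sketch. It makes several assumptions: `ToyServer`, the `process-order` workflow, the `charge-card` and `ship-order` tasks, and the `taskNodes` JSON field are all hypothetical, and the Task Methods are called inline rather than by separate worker processes over the network. It does not use the LittleHorse SDK.

```python
import json
import uuid
from typing import Any, Callable, Dict

Order = Dict[str, Any]


# 1. Task Methods: plain functions that a Task Worker would invoke.
def charge_card(order: Order) -> Order:
    return {**order, "charged": True}


def ship_order(order: Order) -> Order:
    return {**order, "shipped": True}


TASK_METHODS: Dict[str, Callable[[Order], Order]] = {
    "charge-card": charge_card,
    "ship-order": ship_order,
}


# 2. WfSpec definition: executed once, producing a serialized description.
#    The field names here are made up for the example.
def build_wf_spec_json() -> str:
    spec = {"name": "process-order", "taskNodes": ["charge-card", "ship-order"]}
    return json.dumps(spec)


class ToyServer:
    """Hypothetical in-process stand-in for the LittleHorse Kernel."""

    def __init__(self) -> None:
        self.wf_specs: Dict[str, Dict[str, Any]] = {}
        self.wf_runs: Dict[str, Order] = {}

    def register_wf_spec(self, spec_json: str) -> None:
        spec = json.loads(spec_json)
        self.wf_specs[spec["name"]] = spec

    def run_wf(self, wf_spec_name: str, variables: Order) -> str:
        wf_run_id = uuid.uuid4().hex
        state = dict(variables)
        # Simplification: run each Task Method inline instead of dispatching
        # it to a remote Task Worker through a Task Queue.
        for task_name in self.wf_specs[wf_spec_name]["taskNodes"]:
            state = TASK_METHODS[task_name](state)
        self.wf_runs[wf_run_id] = state
        return wf_run_id


# 3. Trigger code: start one WfRun per incoming event (e.g. POST /order).
def handle_post_order(server: ToyServer, body: Order) -> str:
    return server.run_wf("process-order", body)


server = ToyServer()
server.register_wf_spec(build_wf_spec_json())
run_ids = [handle_post_order(server, {"orderId": n}) for n in (1, 2)]
```

Note that the spec-building function never executes any business logic. It only describes the steps, which is why it needs to run just once, while the trigger handler runs for every event.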

The real magic of a workflow engine is in what happens after you run your workflow. Without a workflow engine like LittleHorse (and especially when building microservices), it's really hard to glue things together. As a consequence, there is no visibility into what happens between the start and end of the process, and if something goes wrong you can neither recover nor identify the cause.